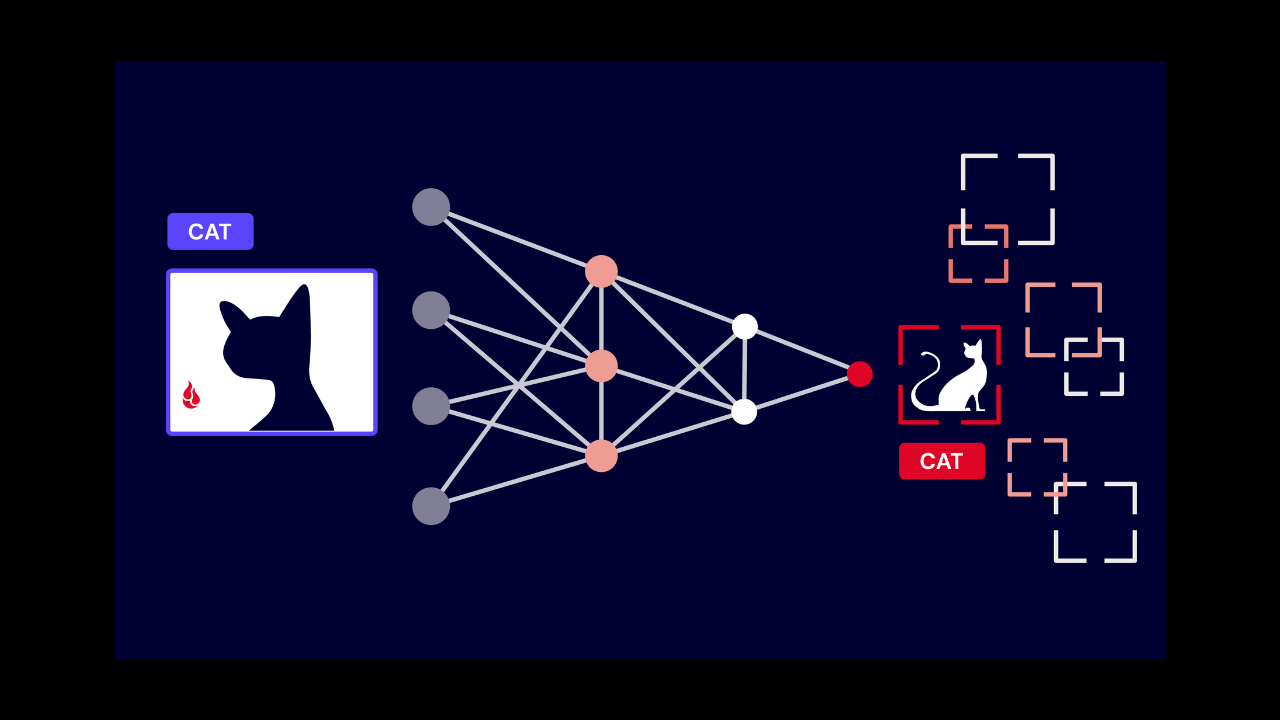

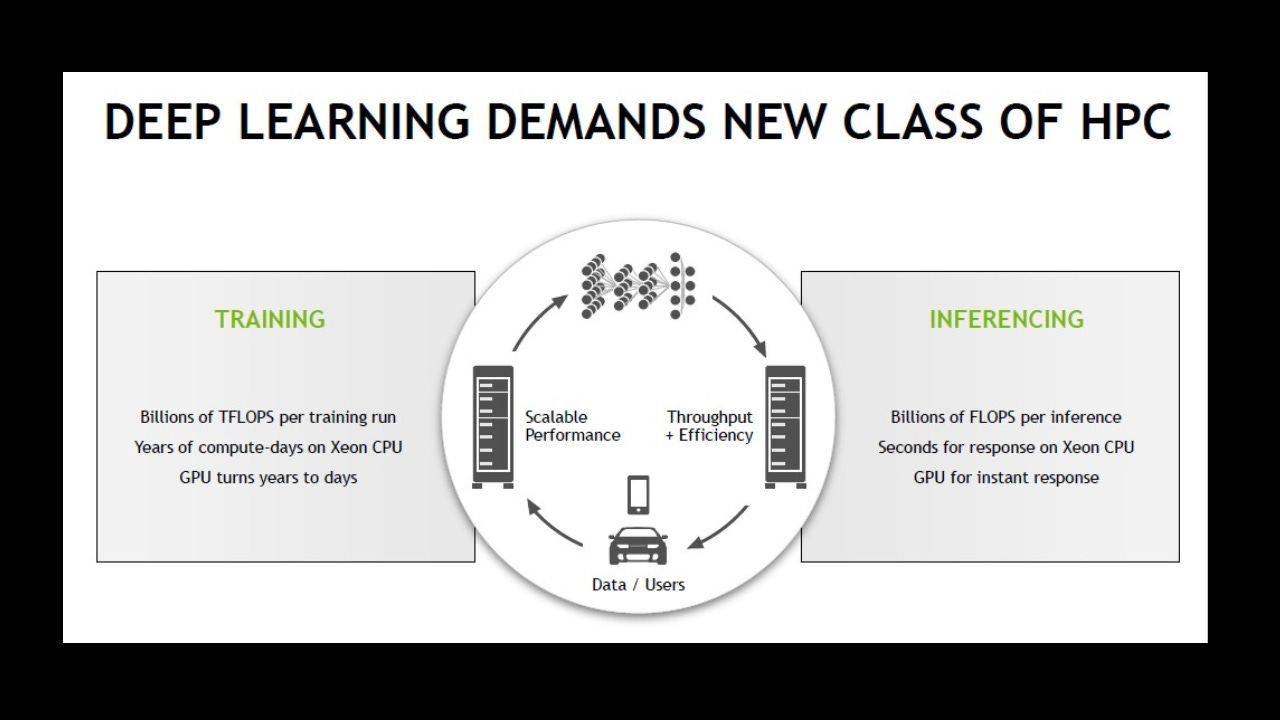

Artificial intelligence training and inference form the foundation of modern machine learning applications. AI training is the phase in which a model learns from large amounts of data, identifying the patterns, correlations, and statistical relationships that allow it to make predictions or decisions. In supervised learning, the model is presented with labeled examples and adjusts its internal parameters through iterative optimization methods such as gradient descent. The goal of training is a model that generalizes well: one that accurately predicts outcomes for new, unseen data rather than merely memorizing the training examples.
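To make the training loop concrete, here is a minimal sketch that assumes the simplest possible model: a one-feature linear regression fitted by full-batch gradient descent on mean squared error. The synthetic data, learning rate, and epoch count are illustrative choices, not values from any real system. The final lines check generalization by scoring the model on held-out examples it never saw during training.

```python
import random


def train_linear_model(xs, ys, lr=0.05, epochs=500):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current model on every training example
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(residual^2)
        grad_w = 2 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2 * sum(residuals) / n
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, xs, ys):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Synthetic labeled data drawn from y = 3x + 1 plus Gaussian noise (illustrative)
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(200)]
ys = [3 * x + 1 + rng.gauss(0, 0.1) for x in xs]

# Train on the first 150 examples; keep the last 50 unseen
w, b = train_linear_model(xs[:150], ys[:150])
test_mse = mse(w, b, xs[150:], ys[150:])
print(f"w={w:.2f}, b={b:.2f}, held-out MSE={test_mse:.4f}")
```

A low error on the held-out slice, close to the noise level in the data, is the signal that the model has learned the relationship rather than memorized the training points.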

Once training is complete, AI inference begins. Inference is the real-world application of the trained model: the model receives new data and produces outputs based on what it learned, typically with its parameters held fixed. Inference is where AI proves its usefulness, demonstrating whether it can generalize and act reliably in dynamic, unpredictable environments.
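A minimal sketch of inference in code, assuming a tiny logistic-regression classifier whose parameters (`WEIGHTS` and `BIAS`, both hypothetical values) came out of an earlier training run. The point it illustrates is that inference is only a forward pass: the parameters are read but never updated.

```python
import math

# Hypothetical parameters assumed to come from a finished training run
WEIGHTS = (1.5, -2.0)
BIAS = 0.25


def predict_proba(features):
    """Inference: a forward pass with frozen parameters; nothing is updated."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))  # sigmoid maps the score to (0, 1)


def predict_label(features, threshold=0.5):
    """Turn the probability into a hard 0/1 decision."""
    return int(predict_proba(features) >= threshold)


# A new, unseen input arriving at run time
sample = (0.8, 0.1)  # z = 1.5*0.8 - 2.0*0.1 + 0.25 = 1.25
print(predict_proba(sample), predict_label(sample))
```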

The hardware requirements for AI inference depend on the complexity of the model and how quickly predictions are needed. Powerful GPUs are often used for workloads that require high throughput and low latency, such as real-time video analysis. Lighter applications, such as mobile apps or IoT devices, may run on CPUs or on edge AI accelerators designed for efficiency in constrained environments. Specialized accelerators such as Google's Tensor Processing Units (TPUs), together with inference-optimization software such as NVIDIA's TensorRT, can speed up inference further, shortening response times and reducing energy consumption.

The importance of inference extends across many critical industries. In healthcare, AI systems assist in diagnosing diseases by analyzing medical images or patient data quickly and, for some tasks, with high accuracy. In finance, inference enables real-time fraud detection by flagging suspicious transactions as they occur. Autonomous vehicles rely heavily on inference to process sensor data in real time, making the rapid decisions on which passenger and pedestrian safety depends.

The effectiveness of AI inference is tied to several factors: the quality and diversity of the training data, the robustness of the model architecture, and the fine-tuning performed post-training. A model trained on a biased or narrow dataset will likely produce unreliable results when encountering diverse real-world scenarios. Careful preparation of training datasets and thoughtful design of model architecture are essential prerequisites for successful inference.

As AI systems grow more complex, techniques such as model quantization, pruning, and knowledge distillation are used to streamline inference. These methods reduce the computational demands of a model, often with only a small loss in accuracy, enabling deployment on devices with limited processing power or strict energy constraints.
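Quantization is the easiest of these techniques to show in a few lines. The sketch below assumes symmetric, per-tensor int8 quantization, which is one common scheme among several: every weight is divided by a single scale factor and rounded to an integer in [-127, 127]. Stored as int8, each weight takes 1 byte instead of the 4 bytes of float32. The function names and example weights are hypothetical, and plain Python lists stand in for real tensors.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # one float shared by all weights
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)
```

Because each value is rounded to the nearest multiple of `scale`, the reconstruction error per weight is at most `scale / 2`. A single large outlier inflates `scale` and coarsens every other weight, which is one reason practical schemes often use per-channel scales.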

In a world increasingly driven by data and automation, mastering both AI training and inference is indispensable. Training provides the foundation, and inference represents the application: the ultimate test of whether an AI system can perform meaningful, reliable tasks in real-world conditions. Through proper training, optimization, and thoughtful deployment, AI systems can deliver substantial benefits across many sectors of modern society.

The growing sophistication of AI models demands increasingly efficient inference mechanisms. As AI continues to integrate into daily operations across industries, the necessity for real-time decision-making becomes paramount. Inference systems must not only be fast but also reliable under varying conditions, adapting to changes without human intervention. This demand has spurred innovations such as edge computing, where inference is conducted closer to the data source, reducing latency and preserving bandwidth.

Edge AI empowers devices like smartphones, surveillance cameras, and industrial sensors to make instant decisions without reliance on centralized cloud systems. This decentralization of intelligence enhances privacy, reduces operational costs, and ensures greater system resilience. For instance, in critical environments such as autonomous driving, every millisecond counts; an inference system capable of processing visual and spatial data at the edge can mean the difference between safety and disaster.
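Why every millisecond counts can be made concrete with simple arithmetic. The sketch below computes how far a vehicle travels while it waits for one inference result; the speed and latency values are illustrative assumptions, not measurements of any particular system.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres a vehicle travels while waiting for one inference result."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000  # ms -> s


# Illustrative latencies at a highway speed of 100 km/h
for latency in (10, 50, 100, 300):
    metres = distance_during_latency(100, latency)
    print(f"{latency:>4} ms at 100 km/h -> {metres:.2f} m")
```

At 100 km/h (about 27.8 m/s), each 100 ms of latency corresponds to roughly 2.8 m of travel before the system can react, so avoiding a round trip to a distant server can translate directly into stopping distance.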

The architecture of models designed for inference must strike a balance between complexity and efficiency. Deep learning models with billions of parameters offer high accuracy but at the cost of speed and energy consumption. Techniques such as model compression, neural architecture search, and transfer learning have emerged to address these challenges. By simplifying models without severely impacting performance, engineers can deploy AI systems in real-world environments where computational resources are finite.
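Pruning is one form of the model compression described above. A minimal sketch, assuming unstructured magnitude pruning on a flat list of weights: the fraction `sparsity` of weights with the smallest absolute values is set to zero. In practice pruning is usually followed by fine-tuning to recover accuracy, and real speedups require sparse-aware kernels or structured pruning; neither is shown here, and the function name is hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, 0.45, -0.1]
pruned = magnitude_prune(weights, 0.5)
print(pruned)
```

The design assumption behind magnitude pruning is that weights close to zero contribute least to the output, so removing them changes predictions the least.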

Training and inference are inherently interlinked. A poorly trained model will falter at the inference stage, leading to incorrect decisions that could have serious consequences, especially in critical fields like medical diagnosis or financial forecasting. Consequently, investment in robust training pipelines, including techniques such as cross-validation, data augmentation, and adversarial training, is vital. Properly trained models are better suited for real-world inference, where the variability of input data demands resilience and adaptability.
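Cross-validation, one of the techniques named above, can be sketched in plain Python. This assumes k-fold cross-validation with a closed-form least-squares line as the model; the data are synthetic and the helper names are hypothetical. Each fold is held out exactly once while the model is fit on the remaining folds, so every example contributes to one held-out score.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 and split them into k nearly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_line(xs, ys):
    """Closed-form least squares for y = w*x + b."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    var = sum((x - mx) ** 2 for x in xs)
    w = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var
    return w, my - w * mx


def cross_validate(xs, ys, k=5):
    """Return the held-out mean squared error for each of the k folds."""
    scores = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        w, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        err = sum((w * xs[i] + b - ys[i]) ** 2 for i in fold) / len(fold)
        scores.append(err)
    return scores


# Synthetic data from y = 2x - 3 plus noise (illustrative)
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [2 * x - 3 + rng.gauss(0, 0.5) for x in xs]
scores = cross_validate(xs, ys, k=5)
print([round(s, 3) for s in scores], sum(scores) / len(scores))
```

A large spread between fold scores is a warning sign that performance depends heavily on which examples the model happened to see during training.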

Evaluation metrics for inference performance extend beyond simple accuracy. Metrics such as latency, throughput, energy efficiency, and model robustness under adversarial conditions are equally important. A model that is highly accurate but slow and resource-intensive may be unsuitable for deployment. Thus, the measure of a successful AI system is not merely its intelligence but its practical utility in live environments.
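Latency and throughput can be measured with nothing more than the Python standard library. The sketch below assumes a synchronous `predict` callable served one request at a time (batch size 1); `toy_model` is a hypothetical stand-in for a real forward pass. It reports the median (p50) and tail (p99) latency, because an average can hide occasional slow requests.

```python
import statistics
import time


def benchmark(predict, inputs, warmup=10):
    """Measure per-request latency percentiles and overall throughput of `predict`."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls are excluded from the measurements
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points; cuts[49] is p50
    return {
        "p50_ms": cuts[49] * 1000,
        "p99_ms": cuts[98] * 1000,
        "throughput_per_s": len(inputs) / total,
    }


def toy_model(x):
    # Hypothetical stand-in for a real model's forward pass
    return sum(i * x for i in range(1000))


print(benchmark(toy_model, list(range(500))))
```

Batching requests usually raises throughput at the cost of per-request latency, so the two numbers are best read together against the deployment's actual constraints.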

Another key development in AI inference is continual learning, in which models update themselves as new data becomes available, reducing the need for frequent retraining from scratch. This ongoing adaptation helps inference stay accurate over time, even as the external environment shifts. Fields such as cybersecurity, where threats constantly evolve, stand to benefit greatly from such adaptive systems.
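The simplest form of this idea is online learning, in which the model takes a small gradient step on each new example as it arrives. The sketch below assumes a one-feature linear model and simulates a data stream whose underlying relationship changes halfway through; the class name, learning rate, and drift are illustrative. Full continual-learning methods also try to avoid catastrophic forgetting of older knowledge, which this sketch does not address.

```python
import random


class OnlineLinearModel:
    """y = w*x + b, updated one example at a time with stochastic gradient descent."""

    def __init__(self, lr=0.05):
        self.w, self.b, self.lr = 0.0, 0.0, lr

    def predict(self, x):
        return self.w * x + self.b

    def update(self, x, y):
        # Gradient of the squared error (prediction - y)^2 for this single example
        err = self.predict(x) - y
        self.w -= self.lr * 2 * err * x
        self.b -= self.lr * 2 * err


rng = random.Random(0)
model = OnlineLinearModel()
# Simulated drift: the true slope flips from 2.0 to -1.0 halfway through the stream
for step in range(4000):
    true_w = 2.0 if step < 2000 else -1.0
    x = rng.uniform(-1, 1)
    model.update(x, true_w * x + 0.5)
print(round(model.w, 2), round(model.b, 2))
```

After the shift, the parameters move toward the new relationship (w = -1, b = 0.5) without any retraining from scratch.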

Training and inference together form a cycle of intelligence: one provides the foundation, the other delivers results. Mastery over both processes ensures that artificial intelligence serves its intended role, not merely as a theoretical concept but as a powerful, practical tool woven into the fabric of human advancement.

Understanding AI training and inference is critical for users at all levels because it shapes their expectations, informs their trust, and guides their practical use of AI technologies. Without grasping these foundational processes, users risk misunderstanding the capabilities and limitations of AI systems.

For general users, knowing the difference between training and inference fosters realistic expectations. Many mistakenly believe AI can think or reason like a human mind; in truth, it operates based on patterns learned during training. When users comprehend that inference is the application of pre-learned knowledge rather than spontaneous creativity, they approach AI outputs with proper scrutiny and discernment.

For professionals deploying AI, an understanding of training and inference is essential for making informed choices. They must assess whether a model is appropriately trained for their specific application and whether its inference speed, accuracy, and resource needs fit within operational constraints. Without this knowledge, poor system design, costly errors, and ineffective deployments are likely.

For policymakers and decision-makers, a firm grasp of AI training and inference guards against both unwarranted fear and blind enthusiasm. Laws and guidelines surrounding AI use must be based on its real capabilities. Misunderstanding the nature of AI inference could lead to either overregulation, stifling innovation, or underregulation, risking public safety and privacy.

For developers and engineers, mastery of training and inference is the very foundation of their craft. They must design, optimize, and evaluate models with full awareness of how training quality impacts inference outcomes and how inference must align with the practical needs of the end users.

At every level, knowledge of these processes encourages responsible use of AI, fosters better human-AI interaction, and ensures that the enormous potential of artificial intelligence is realized with caution, precision, and integrity.

Training and inference are not technical footnotes but the pillars upon which all artificial intelligence stands. For users, developers, and leaders alike, understanding these processes ensures that AI is approached not as a mysterious force, but as a crafted tool governed by human intelligence and discipline. Mastery of these concepts strengthens the wise application of AI, upholds accountability, and secures the benefits of technology while guarding against its misuse.
